Automatic quality assessment of articles in multilingual Wikipedia and identification of its important information sources on various topics

Wikipedia, as the largest and most popular open-access online encyclopedia, plays an important role in global access to knowledge and information. This platform offers quick access to a huge amount of information on almost any topic, making it a valuable resource for students, teachers and researchers. Wikipedia enables free access to information for people from various backgrounds and regions of the world, contributing to blurring the differences in access to knowledge. Currently, it has over 62 million articles in over 300 languages.

Wikipedia’s freedom to edit is both its great asset and its challenge. While the freedom to add and modify articles in this encyclopedia enables the democratization of access to knowledge and supports global cooperation, it also requires effective quality control and moderation mechanisms. The freedom to edit Wikipedia allows everyone, regardless of education level or social position, to contribute to building and developing a publicly available body of knowledge. This allows for broad access to creating and sharing information. Compared to traditional encyclopedias, Wikipedia can be updated almost immediately when new information or events appear. However, it should also be taken into account that this freedom to edit Wikipedia may lead to the intentional introduction of false information, removal of valuable content or other forms of vandalism, which undermines the credibility and quality of the encyclopedia. Furthermore, different viewpoints and beliefs of editors may lead to bias in articles, which may affect the neutrality and objectivity of the information presented. Additionally, frequent editing and revisions can lead to excessive variability in some articles, making it difficult to maintain the consistency and quality of information. Therefore, ensuring high quality of all articles in different languages in the face of editing freedom is a significant challenge.

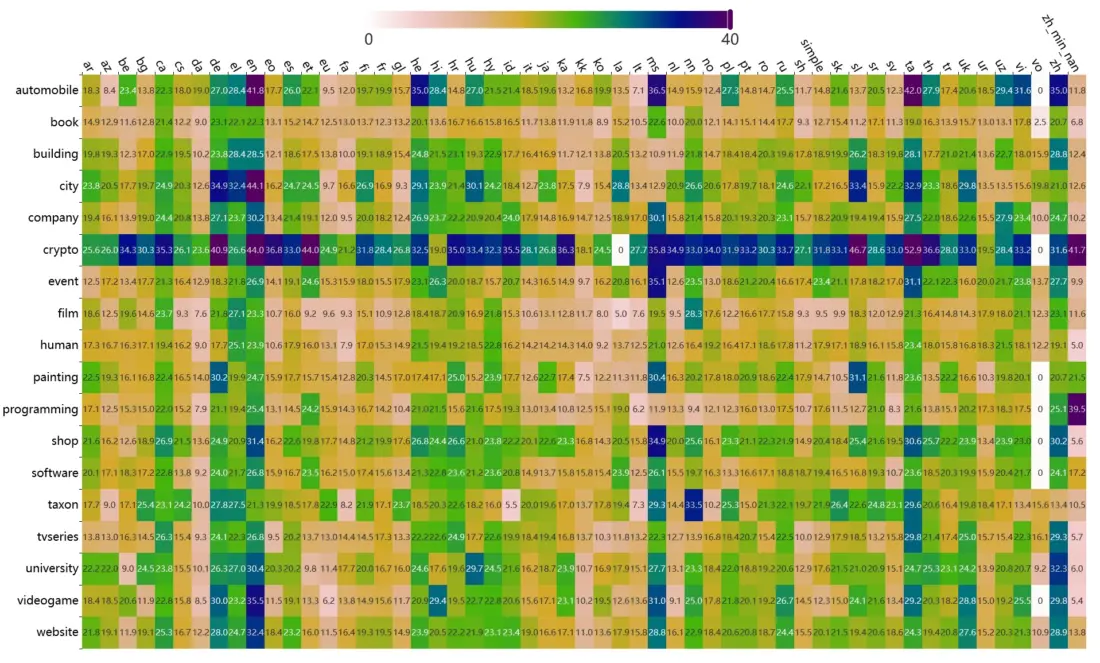

During the seminar, Dr. Włodzimierz Lewoniewski discussed the methods and tools used to analyze and evaluate content in a popular multilingual encyclopedia and ways of identifying and assessing information sources. The Department of Information Systems conducts research in the area of creating models for automatic assessment of the quality of Wikipedia articles in various languages. Hundreds of measures have been developed as part of this research. Some of them have been implemented in the WikiRank tool, which allows for quality assessment using a synthetic quality measure on a continuous scale from 0 to 100. The figure below shows one of the charts presented during the seminar, which shows the average quality values of Wikipedia articles in different languages and topics using this measure (data as of February 2024, an interactive version of this chart is also available):

Scientific works also focus on analyzing and assessing the reliability of information sources that are cited in Wikipedia articles. There are now over 330 million footnotes (links or references) to a variety of sources, making it possible to assess the importance of individual websites as information providers. The BestRef tool is one example of systems that analyze and assess the millions of websites used as sources of information on Wikipedia.

Automatic evaluation of articles on Wikipedia aims to determine to what extent the content of these articles meets various quality criteria, such as completeness, objectivity, topicality, reliability of cited sources, and stylistic correctness. This is especially important given the multilingual nature of Wikipedia, where linguistic and cultural differences introduce additional challenges. Machine learning techniques, including supervised and unsupervised classification, are used to detect qualitative patterns based on previously labeled examples.

Projects such as DBpedia and Wikidata, which are open semantic databases, play a key role in the ecosystem of open data and the Semantic Internet. They facilitate access to large sets of information in an organized and semantically coherent way, making them invaluable resources for researchers and scientists. They enable advanced analysis in a variety of fields, from social sciences to medicine, opening up new opportunities for research and innovation. Like Wikipedia, they support data processing in multiple languages, which is crucial for global access to information.

Improving the quality of Wikipedia may also impact the quality of other popular online services and tools. For example, Internet search engines such as Google and Bing use information from Wikipedia to create “knowledge boxes” that provide users with short summaries and key information about search terms. These summaries are often based on content from Wikipedia, offering quick access to condensed information. Additionally, generative AI tools like ChatGPT use Wikipedia data in their learning processes, allowing them to generate more accurate and diverse content.

The seminar of the Institute of Informatics and Quantitative Economics took place on February 16, 2024.